Chess Cheaters Study by Chessable Research Award Winner

The Chessable Research Awards for the Summer 2023 cycle had four winners: undergraduate student Michael Martins and graduate students Jordan von Hippel, Jérôme Genzling, and Jane Zhang.

In this guest blog post, Jordan von Hippel describes the chess cheating experiments that he and his principal advisor, Grandmaster David Smerdon, Ph.D., conducted with thousands of participants.

The Intricacies of Detecting Chess Cheaters: A Deep Dive into Expertise, Confidence, and Cheating

by Jordan von Hippel

Cheating and dishonesty occur on a grand scale at all levels of society. In fact, rough estimates indicate that people lie in one out of every five of their social interactions (DePaulo et al., 1996). Students cheat on their exams, individuals and businesses cheat on their taxes, corporations cheat on environmental and other regulations, jobseekers cheat with fake or aggrandised resumés, and, of course, people cheat in games. The costs of cheating are extremely difficult to measure. The 2022 Occupational Fraud report (Association of Certified Fraud Examiners, 2022) estimated that organisations lose 5% of their revenue to fraud, amounting to hundreds of billions of dollars each year. Beyond immediate financial or material losses, cheating erodes trust in institutions and individuals, creating a climate of scepticism and cynicism. It fosters unfair competition, devaluing genuine achievements and demotivating those who operate with integrity.

Chess: A Microcosm of Society’s Larger Issues

Chess, a game of strategy and intellect, serves as a fascinating lens through which to study the broader issues of cheating and deception. The game’s rich history, combined with its modern challenges, offers a unique platform for this exploration. Recent controversies, such as the one involving Grandmaster Hans Niemann and World Champion Magnus Carlsen, have brought the complexities of distinguishing genuine skill from potential cheating to the forefront.

The Literature: A Foundation for Exploration

The vast landscape of deception detection, from everyday lies to high-stakes fraud, underscores the challenges inherent in discerning truth from falsehood. While technology and algorithms have been harnessed to detect dishonesty in some domains, human expertise remains a primary tool in many areas, including the realm of chess. Yet, the literature suggests that even experts, whether in forensic science or law enforcement, often perform only marginally better than novices in detecting deception (Ekman & O’Sullivan, 1991; Van den Eeden et al., 2019; Vrij & Mann, 2001; Wright Whelan et al., 2014).

The Study: Two Experiments, One Goal

Our research was structured around two experiments, each designed to delve deeper into the intricacies of cheating in chess.

Experiment 1: The Chess Cheating Challenge

This preliminary study was primarily designed to provide materials for the main experiment. We conducted a private online double-round-robin chess tournament with eight players rated between Elo 1700 and 2300. Players did not know the identities of the other participants. Games were played at 20 minutes + 20 seconds per move and followed FIDE rules, aside from the special provisions related to cheating. Specifically, we wanted to simulate a real-world environment in which cheaters sought assistance by way of a co-conspirator, who could occasionally transmit engine suggestions at critical moments (this was, for example, the mode of cheating in the infamous French cheating scandal at the 2010 Olympiad).

Participants were informed before the tournament that, before each game, some players would be told that they had been assigned to be a ‘Cheater’ for the round. If a player was assigned to be a Cheater, Dr Smerdon (my principal advisor) and I sent them engine suggestions during the game (the top Stockfish 10 suggestions at a depth of roughly 22), and we informed the players that we would be actively trying to help them win the game (i.e., we would send suggestions at what we deemed to be critical moments). Players did not have to play the engine suggestions, and sometimes they chose not to in order to avoid detection. We chose this setup because it most closely mimics the sort of cheating that we are interested in understanding. At the end of each game, we asked players to estimate the probability that their opponent was cheating.

After the tournament was finished, the players participated in a ‘Complaint’ Stage. Each player could lodge a ‘Complaint’ against any of their opponents in any of the rounds if they believed their opponent was assigned to be a Cheater. Lodging a complaint was risky; the following scoring rules applied:

● If the complaint was false, the player had 1 point subtracted from their tournament total score.

● If the complaint was true, the player had 1 point added to their total score.

● In addition, if an opponent lodged a complaint against a player in a game where that player was a ‘Cheater’, the player had 1.5 points subtracted from their score.

These features ensured that players were incentivised both to cheat without getting caught and to catch other cheaters. Players were paid per point of their total score, so prize money was determined by a player’s:

1. Score over the rounds

2. Ability to correctly detect Cheaters, and

3. Ability to avoid detection when they were a Cheater.
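
The scoring rules above can be sketched in a few lines of Python. This is only an illustration of how the three components combine into a total score. The class and field names are hypothetical, and the example player is invented rather than taken from the tournament data.

```python
from dataclasses import dataclass

# Point adjustments taken from the complaint rules described above.
CORRECT_COMPLAINT_BONUS = 1.0
FALSE_COMPLAINT_PENALTY = 1.0
CAUGHT_CHEATING_PENALTY = 1.5


@dataclass
class PlayerRecord:
    """Hypothetical per-player summary; field names are illustrative only."""
    game_points: float       # points scored over the board across all rounds
    correct_complaints: int  # complaints lodged against opponents who were Cheaters
    false_complaints: int    # complaints lodged against opponents who were not
    times_caught: int        # games as a Cheater in which the opponent complained


def total_score(record: PlayerRecord) -> float:
    """Combine game results with the complaint-stage adjustments."""
    return (
        record.game_points
        + CORRECT_COMPLAINT_BONUS * record.correct_complaints
        - FALSE_COMPLAINT_PENALTY * record.false_complaints
        - CAUGHT_CHEATING_PENALTY * record.times_caught
    )


# Invented example: 8.5 game points, two correct complaints,
# one false complaint, and caught once while cheating.
example = PlayerRecord(game_points=8.5, correct_complaints=2,
                       false_complaints=1, times_caught=1)
print(total_score(example))  # 8.5 + 2 - 1 - 1.5 = 8.0
```

Because a false complaint costs as much as a correct one gains, a player guessing blindly gains nothing on average when half of their accusations are right, which is what makes lodging a complaint a genuine risk.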

This setup allowed us to gather a rich dataset for the subsequent experiment. The 56 games produced 112 player-game observations (White and Black) that could be used in Experiment 2 for the cheat-detection survey.

Experiment 2: A Deep Dive into Chess Cheating Detection

While the preliminary experiment provided a foundation, Experiment 2 stands as the cornerstone of this research. Designed to directly address the research questions and hypotheses, this experiment promises not only to shed light on the primary concerns but also to uncover nuanced trends within the data.

The Survey Setup

The heart of Experiment 2 is an online survey meticulously crafted using Qualtrics. With over 10,000 chess players trying out their cheat-detection skills via our survey, the study has easily been the largest research experiment on chess players ever conducted. The survey was published online and made available in eight languages. Dr. Smerdon’s chess network, combined with promotions on leading platforms like Chess.com and various social media chess groups, ensured a diverse and extensive participant pool, from beginners to grandmasters (at least one of whom reached 2800+). Essential data points collected included participants’ chess ratings, chess experience, history of cheating in chess, any bans they might have faced due to cheating, and more.

The Experiment Mechanics

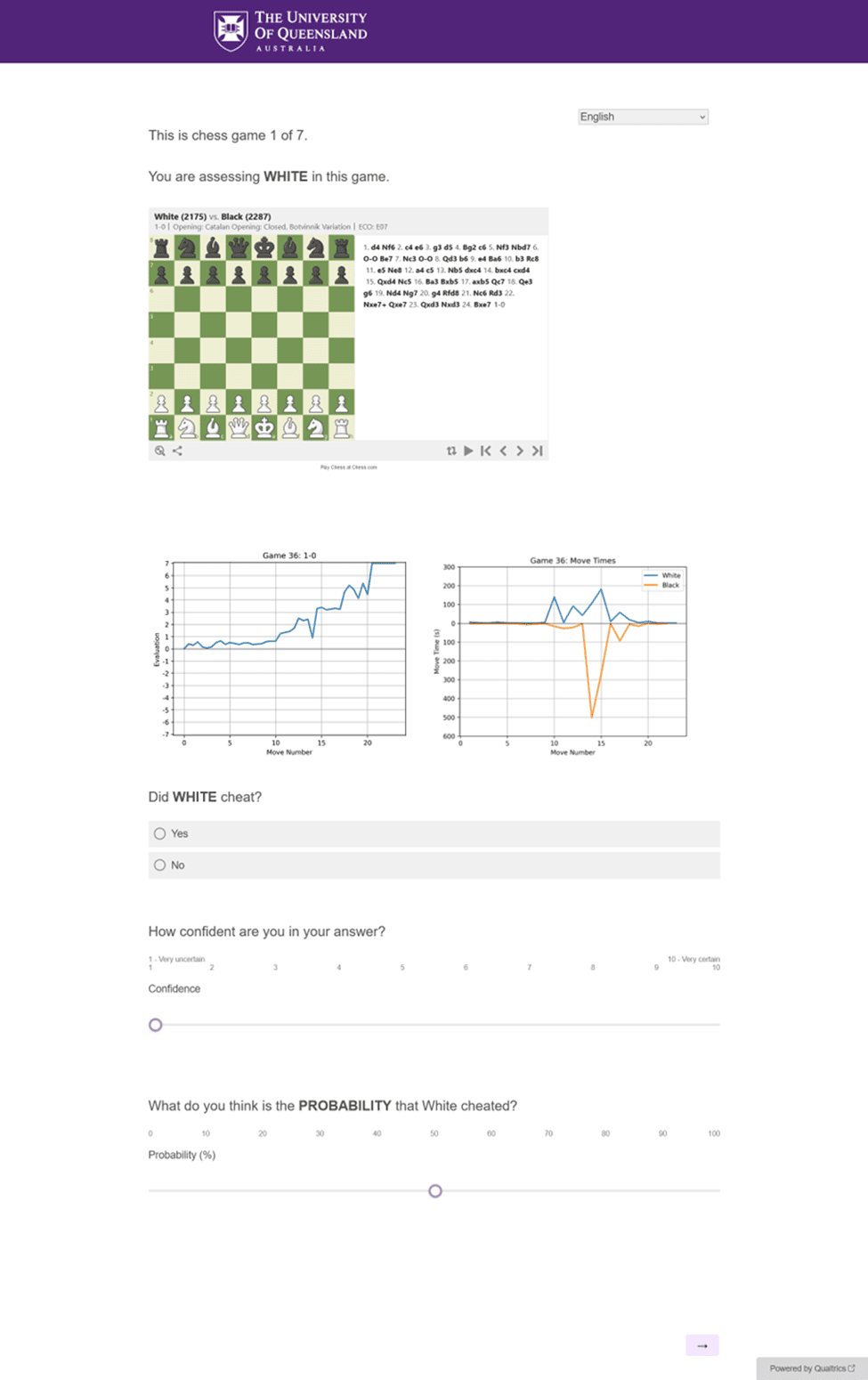

The games from Experiment 1 were repurposed for this study, with each game viewed from both the white and black player’s perspectives. From the 112 observations derived from these games, 72 were selected for Experiment 2. The selection process ensured a balanced representation of games where cheating occurred and where it didn’t. Each participant viewed one game from each of seven distinct groups, with the games randomized within each group (so, each participant saw a unique set of 7 games).

For every game, participants were tasked with determining if cheating occurred, gauging their confidence in their judgment, and estimating the probability of cheating.
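
The assignment of games to a participant can be sketched as follows. This is a minimal illustration only: the group names, group sizes, and game identifiers are hypothetical placeholders, since the actual composition of the seven groups is not described here, and the survey itself was built in Qualtrics rather than in Python.

```python
import random


def draw_game_set(groups: dict[str, list[str]], rng: random.Random) -> list[str]:
    """Pick one game at random from each group."""
    return [rng.choice(games) for games in groups.values()]


# Hypothetical layout: seven groups of ten placeholder game IDs each.
groups = {
    f"group_{g}": [f"g{g}_obs{i}" for i in range(1, 11)]
    for g in range(1, 8)
}

rng = random.Random(2023)  # fixed seed so the sketch is reproducible
selection = draw_game_set(groups, rng)
print(selection)  # seven game IDs, one per group
```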

Supporting Participants’ Judgments

To aid participants in their assessments, they were provided with:

– Modified FIDE ratings of both players, with a uniformly distributed random shock of up to ±50 points applied to the actual ratings.

– An evaluation graph, a staple in the chess community, showcasing the advantage shifts throughout the game.

– A move time graph, illustrating the time taken for each move by the players.
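
The rating modification in the first item can be sketched as below. This is an assumption-laden illustration: it supposes that the shock is a whole number of rating points drawn independently for each player, neither of which is specified above, and the function name is hypothetical.

```python
import random


def mask_rating(true_rating: int, rng: random.Random, max_shock: int = 50) -> int:
    """Shift a rating by an integer drawn uniformly from [-max_shock, +max_shock]."""
    return true_rating + rng.randint(-max_shock, max_shock)


rng = random.Random(7)  # fixed seed so the sketch is reproducible
# Invented ratings for the two players in one game.
white_shown = mask_rating(2150, rng)
black_shown = mask_rating(1890, rng)
print(white_shown, black_shown)
```

A shock of this size hides the players' exact ratings while still telling participants roughly how strong each player is.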

An example of what participants saw is shown below.

Research Questions

Using Experiment 2, we want to answer the following questions:

Are experts better at detecting cheating than novices? Can people detect cheating above chance?

Previous literature, mainly from the fields of law and policing, indicates that experts are either no better, or only slightly better, than laypeople at detecting deception. In addition, these studies found that experts either do not outperform chance or outperform it only marginally. Our study will be the first of its kind to assess these questions on cheating, rather than deception, using chess as our vehicle.

Does experience help with detecting cheating?

Related to, but distinct from, expertise is experience. We collected data on two types of experience in this study. The first is experience playing competitive chess, which, when controlling for expertise, can shed light on whether having more domain experience helps people detect cheating. The second type is experience with cheating. We asked players whether they had ever cheated, and whether they had ever been banned for cheating. While all answers in our survey are kept anonymous, it is of course possible (and likely) that past cheating is underreported. However, with such a high-powered study, it will still be possible to test whether “it takes one to know one”: whether players who themselves cheated in the past are better at detecting cheating.

How related are confidence and accuracy in detecting chess cheaters?

A significant aspect of our study was examining the relationship between confidence and accuracy. Previous research indicates that people, even experts, are often overconfident in their judgments. This overconfidence can be seen across various domains, from general knowledge to eyewitness testimonies (Bornstein & Zickafoose, 1999; Sternglanz et al., 2019). Our research aimed to determine if this trend held true in the chess world, especially when it comes to detecting cheaters.

Results

The study is still ongoing at the time of writing, so the preliminary results cannot yet be published: we don’t want to give future participants any information that previous participants did not have access to. The main sample currently has over 10,000 participants. Respondents included beginners who had just started playing, all the way up to (almost) the very best players in the world. They hailed from almost every country and ranged from 10 years to 100 years of age. There are several other interesting secondary questions on our list, such as looking at the effects of age, gender, and nationality on cheating detection, as well as separating “true hits” (correctly detecting a cheater) from “false positives” (incorrectly accusing an innocent player). For these and other results, you’ll have to wait for the paper!
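
To make the distinction between “true hits” and “false positives” concrete, here is a minimal sketch of how the two rates could be computed for one participant. The function name and the seven judgments are invented for illustration; they are not results from the study.

```python
def detection_summary(judgments: list[tuple[bool, bool]]) -> dict[str, float]:
    """Summarise (accused, was_cheater) pairs into hit and false-positive rates."""
    hits = sum(accused and cheater for accused, cheater in judgments)
    false_positives = sum(accused and not cheater for accused, cheater in judgments)
    n_cheaters = sum(cheater for _, cheater in judgments)
    n_innocent = len(judgments) - n_cheaters
    return {
        # Share of actual cheaters who were correctly accused.
        "hit_rate": hits / n_cheaters if n_cheaters else float("nan"),
        # Share of innocent players who were wrongly accused.
        "false_positive_rate": (
            false_positives / n_innocent if n_innocent else float("nan")
        ),
    }


# Invented participant: seven games, three with a cheater and four without.
judgments = [
    (True, True), (False, True), (True, True),                      # cheater games
    (True, False), (False, False), (False, False), (False, False),  # clean games
]
summary = detection_summary(judgments)
print(summary["false_positive_rate"])  # 1 wrong accusation out of 4 clean games = 0.25
```

Reporting the two rates separately matters because a participant who accuses everyone catches every cheater but also accuses every innocent player.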

Leaderboard

All winners have been contacted to provide their bank details to receive their prize. If you have not been contacted, please email me: [email protected]

All prize-winners achieved a perfect 7/7; 45 participants accomplished this feat. The winners can be seen on the leaderboard below. You can see the full leaderboard list here. For participants who consented to be on the leaderboard, their usernames (if provided) are shown.

Leaderboard: 7/7s

| Site | Username | Score |
|---|---|---|
| Anonymous | | 7 |
| Lichess | Tblrone | 7 |
| Lichess | js29 | 7 |
| Anonymous | | 7 |
| Lichess | Pzconte | 7 |
| Anonymous | | 7 |
| Lichess | NotKj | 7 |
| Anonymous | | 7 |
| Lichess | Sorensp | 7 |
| Chess.com | A-fier | 7 |
| Anonymous | | 7 |
| Chess.com | ANXIOUSFIST | 7 |
| Anonymous | | 7 |
| Lichess | Markus2709 | 7 |
| Chess.com | AshPlayer88 | 7 |
| Chess.com | mamboono5 | 7 |
| Chess.com | Chapa dog | 7 |
| Chess.com | Montypython | 7 |
| Chess.com | immatt64 | 7 |
| Chess.com | Tilt | 7 |
| Anonymous | | 7 |
| Anonymous | | 7 |
| Chess.com | PatchPlayer | 7 |
| Chess.com | SoyuzNerushimy | 7 |
| Chess.com | Amazedrenzme | 7 |
| Chess.com | ExactMrSmith | 7 |
| Chess.com | Breead2man | 7 |
| Chess.com | MPE37 | 7 |
| Chess.com | augustodemoraisrocha | 7 |
| Anonymous | | 7 |
| Chess.com | Artem_Kushchenko | 7 |
| Chess.com | ninchess04 | 7 |
| Chess.com | Motioncloud | 7 |
| Chess.com | SharpUniverse | 7 |
| Anonymous | | 7 |
| Chess.com | Anonymous | 7 |
| Lichess | walid_shebani | 7 |
| Chess.com | Lastdance45 | 7 |
| Chess.com | Bataja84 | 7 |
| Chess.com | Anonymous | 7 |
| Anonymous | | 7 |
| Chess.com | seantheprawn18 | 7 |
| Lichess | DarthPontus | 7 |
| Anonymous | | 7 |
| Anonymous | | 7 |

References

Association of Certified Fraud Examiners. (2022). Occupational fraud 2022: A report to the nations [White paper]. https://legacy.acfe.com/report-to-the-nations/2022/

Bornstein, B. H., & Zickafoose, D. J. (1999). “I know I know it, I know I saw it”: The stability of the confidence–accuracy relationship across domains. Journal of Experimental Psychology: Applied, 5(1), 76.

DePaulo, B. M., Kashy, D. A., Kirkendol, S. E., Wyer, M. M., & Epstein, J. A. (1996). Lying in everyday life. Journal of Personality and Social Psychology, 70(5), 979.

Ekman, P., & O’Sullivan, M. (1991). Who can catch a liar? American Psychologist, 46(9), 913.

Sternglanz, R. W., Morris, W. L., Morrow, M., & Braverman, J. (2019). A review of meta-analyses about deception detection. The Palgrave Handbook of Deceptive Communication, 303-326.

van den Eeden, C. A., de Poot, C. J., & van Koppen, P. J. (2019). The forensic confirmation bias: A comparison between experts and novices. Journal of Forensic Sciences, 64(1), 120-126.

Vrij, A., & Mann, S. (2001). Who killed my relative? Police officers’ ability to detect real-life high-stake lies. Psychology, Crime & Law, 7(2), 119-132.

Wright Whelan, C., Wagstaff, G. F., & Wheatcroft, J. M. (2014). High-stakes lies: Verbal and nonverbal cues to deception in public appeals for help with missing or murdered relatives. Psychiatry, Psychology and Law, 21(4), 523-537.

Interested in research?

The Chessable Research Awards are for undergraduate and graduate students conducting university-level chess research. Chess-themed topics may be submitted for consideration and ongoing or new chess research is eligible. Each student must have a faculty research sponsor. For more information, please visit this link.